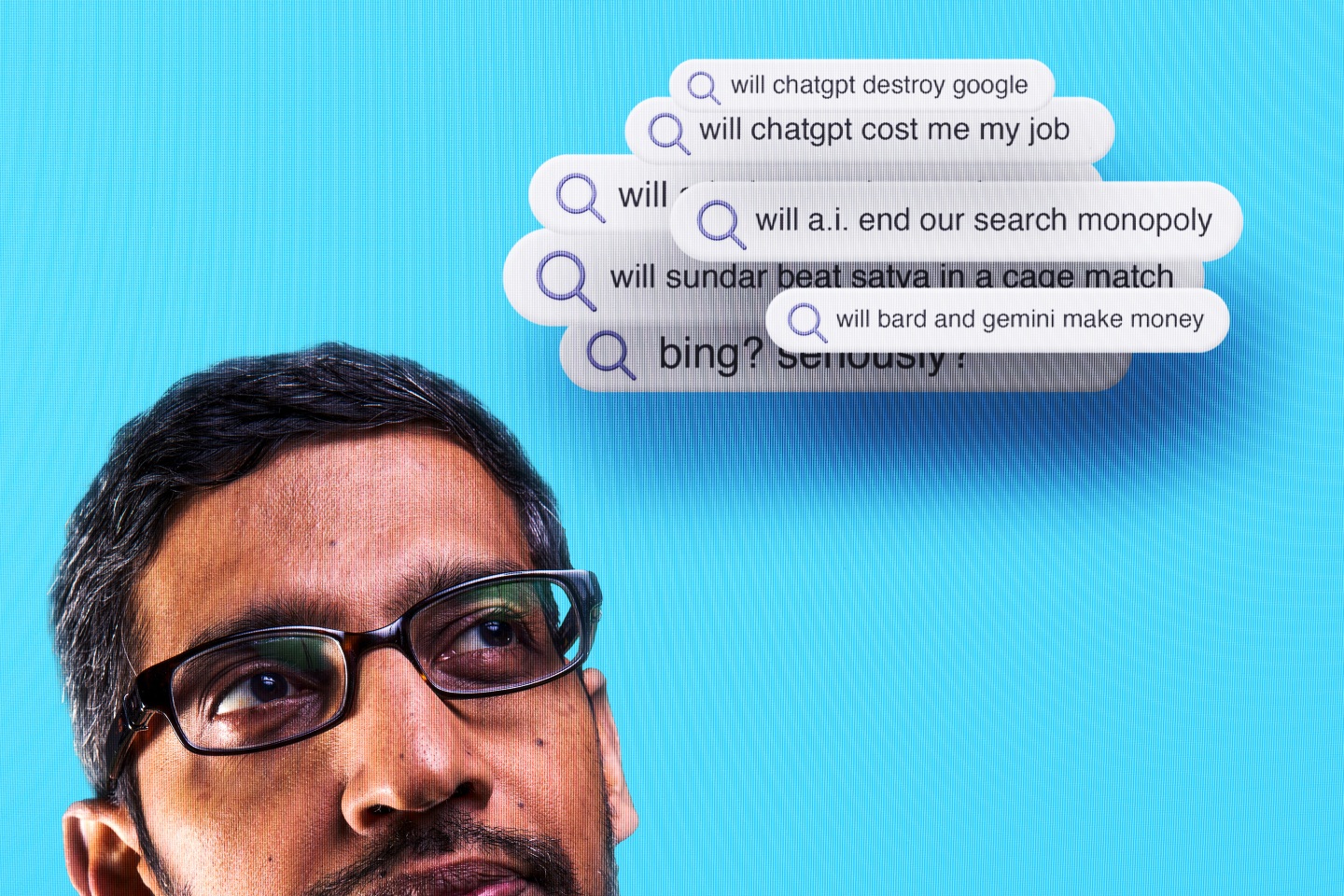

Inside Google’s scramble to reinvent its $160 billion search business—and survive the A.I. revolution

Sundar Pichai, CEO of Alphabet, parent company of Google, stands onstage in front of a packed house at the Shoreline Amphitheatre in Mountain View, Calif. He’s doing his best interpretation of a role pioneered by Steve Jobs and Bill Gates: the tech CEO as part pop idol, part tent-revival preacher, deliverer of divine revelation, not in song or sermon but in software and silicon. Except the soft-spoken, introverted Pichai is not a natural for the role: Somehow his vibe is more high school musical than Hollywood Bowl.

Pichai declared Google to be an “A.I.-first” company way back in 2016. Now A.I. is having a major moment—but a Google rival is grabbing all the attention. The November debut of ChatGPT caught Google off guard, setting off a frantic six months in which it scrambled to match the generative A.I. offerings being rolled out by ChatGPT creator OpenAI and its partner and backer, Microsoft.

Here, at the company’s huge annual I/O developer conference in May, Pichai wants to show off what Google built in those six months. He reveals a new Gmail feature called Help Me Write, which automatically drafts whole emails based on a text prompt; an A.I.-powered immersive view in Google Maps that builds a realistic 3D preview of a user’s route; generative A.I. photo editing tools; and much more. He talks about the powerful PaLM 2 large language model (LLM) that underpins much of this technology—including Bard, Google’s ChatGPT competitor. And he mentions a powerful family of A.I. models under development, called Gemini, that could immensely expand A.I.’s impact—and its risks.

But Pichai dances around the topic that so many in the audience, and watching from around the world on a livestream, most want to hear about: What’s the plan for Search? Search, after all, is Google’s first and foremost product, driving more than $160 billion in revenue last year—about 60% of Alphabet’s total. Now that A.I. chatbots can deliver information from across the internet, not as a list of links but in conversational prose, what happens to this profit machine?

The CEO barely flicks at the issue at the top of his keynote. “With a bold and responsible approach, we are reimagining all our core products, including Search,” Pichai says. It’s an oddly muted way to introduce the product on which the fate of his company—and his legacy—may depend. You can sense the audience’s impatience to hear more in every round of tepid, polite applause Pichai receives for the rest of his address.

But Pichai never returns to the topic. Instead, he leaves it to Cathy Edwards, Google’s vice president of Search, to explain what the company calls, awkwardly, “search generative experience,” or SGE. A combination of search and generative A.I., it returns a single, summarized “snapshot” answer to a user’s search, along with links to websites that corroborate it. Users can ask follow-up questions, much as they would with a chatbot.

It’s a potentially impressive answer generator. But will it generate revenue? That question is at the heart of Google’s innovator’s dilemma.

Alphabet says SGE is “an experiment.” But Pichai has made clear that SGE or something a lot like it will play a key role in Search’s future. “These are going to be part of the mainstream search experience,” the CEO told Bloomberg in June. The technology is certainly not there yet. SGE is relatively slow, and like all generative A.I., it’s prone to a phenomenon computer scientists call “hallucination,” confidently delivering invented information. That can be dangerous in a search engine, as Pichai readily acknowledges. If a parent googles Tylenol dosage for their child, as he told Bloomberg, “there’s no room to get that wrong.”

SGE’s arrival is an indication of just how quickly Google has bounced back in the A.I. arms race. The tech draws on Google’s decades of experience in A.I. and search, demonstrating how much firepower Alphabet can bring to bear. But it also exposes Alphabet’s vulnerability in this moment of profound change. Chatbot-style information gathering threatens to cannibalize Google’s traditional Search and its incredibly lucrative advertising-driven business model. Ominously, many people prefer ChatGPT’s answers to Google’s familiar list of links. “Search as we know it will disappear,” predicts Jay Pattisall, an analyst at research firm Forrester.

So it’s not just Tylenol doses that Pichai and Alphabet can’t afford to botch. Google has the tools to be great at A.I.; what it doesn’t have, yet, is a strategy that comes anywhere near matching the ad revenue that turned Alphabet into the world’s 17th-biggest company. How Google plays this transition will determine whether it will survive, as both a verb and a company, well into the next decade.

When ChatGPT arrived, some commentators compared its significance to the debut of the iPhone or the personal computer; others took bigger swings and placed the chatbot alongside electric motors or the printing press. But to many executives, money managers, and technologists, one thing was obvious from the start: ChatGPT is a dagger pointed straight at Alphabet’s heart. Within hours of ChatGPT’s debut, users playing with the new chatbot declared it “a Google killer.”

Although ChatGPT itself did not have access to the internet, many observers correctly speculated that it would be relatively easy to give A.I.-powered chatbots access to a search engine to help inform their responses. For many queries, ChatGPT’s unified response seemed better than having to wade through multiple links to cobble together information. Plus, the chatbot could write code, compose haikus, craft high school history papers, create marketing plans, and offer life coaching. A Google search can’t do that.

Microsoft—which has invested $13 billion into OpenAI so far—almost immediately moved to integrate OpenAI’s technology into its also-ran search engine, Bing, which had never achieved more than 3% market share. The integration, commentators thought, might give Bing its best shot at knocking Google Search from its pedestal. Satya Nadella, Microsoft’s CEO, quipped that Google was “the 800-pound gorilla” of search, adding, “I want people to know that we made them dance.”

Nadella actually had more faith in his competitor’s dancing skills than some commentators, who seemed to think Google was too bureaucratic and sluggish to boogie. Google’s world-class A.I. team had long been the envy of the tech community. Indeed, in 2017, Google researchers had invented the basic algorithmic design underpinning the entire generative A.I. boom, a kind of artificial neural network called a transformer. (The T in ChatGPT stands for “transformer.”) But Alphabet didn’t seem to know how to turn that research into products that fired the public imagination. Google had actually created a powerful chatbot called LaMDA in 2021. LaMDA’s dialogue skills were superlative. But its responses, like those from any LLM, can be inaccurate, exhibit bias, or just be bizarre and disturbing. Until those issues were resolved—and the A.I. community is far from resolving them—Google feared that releasing it would be irresponsible and pose a reputational risk.

Perhaps as important, there was no obvious way that a chatbot fit with Google’s primary business model—advertising. Compared with Google Search, a summarized answer or a dialogue thread seemed to provide far less opportunity for advertising placement or sponsored links.

To many, that conflict exposed deeper cultural impediments. Google, according to some former employees, has grown too comfortable with its market dominance, too complacent and bureaucratic, to respond to as fast-moving a shift as generative A.I. Entrepreneur Praveen Seshadri joined Google after the company acquired his startup AppSheet in 2020. Shortly after leaving earlier this year, he wrote a blog post in which he said that the company had four core problems: no mission, no urgency, delusions of exceptionalism, and mismanagement. All of these, he said, were “the natural consequences of having a money-printing machine called ‘Ads’ that has kept growing relentlessly every year, hiding all other sins.”

Four other former employees who have left Google in the past two years characterized the culture similarly. (They spoke to Fortune on the condition their names not be used, for fear of violating separation agreements or damaging their career prospects.) “The amount of red tape you would have to wade through just to improve an existing feature, let alone a new product, was mind-boggling,” one said. Another said Google often used the massive scale of its user base and revenues as an excuse not to embrace new ideas. “They set the bar so high in terms of impact that almost nothing could ever clear it,” another said.

Such insider discontent only fueled the broader narrative: Google was toast. In the five weeks between ChatGPT’s release and New Year’s Day, Alphabet’s stock dropped 12%.

By mid-December, there were signs of panic inside the Googleplex. The New York Times reported that Alphabet had declared “a code red” to catch OpenAI and Microsoft. Google’s cofounders, Larry Page and Sergey Brin, who stepped away from day-to-day responsibilities in 2019—but exercise majority control over the company’s shares through a super-voting class of stock—were suddenly back, with Brin reportedly rolling up his sleeves and helping to write code.

It was hard to interpret the cofounders’ return as a ringing endorsement of Pichai’s leadership. But Google executives frame Page and Brin’s renewed presence—and indeed the whole recent scramble—as driven by enthusiasm rather than alarm. “You have to remember, both Larry and Sergey are computer scientists,” says Kent Walker, Alphabet’s president of global affairs, who oversees the company’s content policies and its responsible innovation team, among other duties. “Larry and Sergey are excited about the possibility.” For his part, Pichai later told a Times podcast that he never instituted a “code red.” He did, however, say he was “asking teams to move with urgency” to figure out how to translate generative A.I. into “deep, meaningful experiences.”

Those urgings clearly had an effect. In February, Google announced Bard, its ChatGPT competitor. By March, it had previewed the writing assistant functions for Workspace, as well as its Vertex AI environment, which helps its cloud customers train and run generative A.I. applications on their own data. At I/O in May, it seemed almost every Google product was getting a shiny new generative-A.I. gloss. Some investors were impressed. The company’s “speed of innovation and go-to-market motion are improving,” Morgan Stanley analysts wrote immediately after I/O. Google’s stock, which sank as low as $88 per share in the wake of ChatGPT’s release, was trading above $122 by the time Pichai hit the stage in Mountain View.

But doubts persist. “Google has a lot of embedded advantages,” says Richard Kramer, founder of equity research firm Arete Research, noting its unrivaled A.I. research output and access to some of the world’s most advanced data centers. “They’re just not pursuing them as aggressively commercially as they could be.” Its divisions and product teams are too siloed, he adds, making it difficult to collaborate across the company. (So far, the most visible change to Google’s organizational structure that Pichai has made amid the A.I. upheaval has been merging the company’s two advanced A.I. efforts, Mountain View–based Google Brain and London-based DeepMind, into an entity called Google DeepMind.)

Arete analysts aren’t the only ones who think Google is falling short of its potential. Morgan Stanley noted that, despite the recent recovery, Alphabet suffers from “a valuation gap.” Its shares have historically traded at a premium to other Big Tech companies such as Apple, Meta, and Microsoft, but as of July, they were trading at a price/earnings multiple about 23% below those rivals. To many, that’s a clue that the market doesn’t believe Google can shrug off its A.I. malaise.

Jack Krawczyk, 38, is a boomerang Googler. He joined the company in his twenties, then left in 2011 to work at a startup and, later, at streaming radio service Pandora and WeWork. He came back in 2020 to work on Google Assistant, Google’s answer to Apple’s Siri and Amazon’s Alexa.

Google’s LaMDA chatbot fascinated Krawczyk, who wondered if it could improve Assistant’s functionality. “I know I couldn’t shut up about it for most of 2022, if not 2021,” he says. What held the idea back, Krawczyk tells me, was reliability—that persistent problem of “hallucination.” Would users be okay with answers that sounded confident but were wrong?

“We were waiting for a moment where we got a signal to say, ‘I’m ready for an interaction that feels very convincing,’ ” says Krawczyk. “We started to see those signals” last fall, he says, coyly not mentioning that they included the giant flashing billboard of ChatGPT’s popularity.

Today Krawczyk is senior director of product on the Bard team. Although it drew on research Google had been developing for years, Bard was built fast following ChatGPT’s launch. The new chatbot was unveiled on Feb. 6, just days ahead of Microsoft’s debut of Bing Chat. The company won’t reveal how many people worked on the project. But some indications of the pressure the company was under have emerged.

One of the secrets to ChatGPT’s fluent responses is that they’re fine-tuned via a process called reinforcement learning from human feedback (RLHF). The idea is that humans rate a chatbot’s responses and the A.I. learns to tailor its output to better resemble the responses that get the best ratings. The more dialogues a company can train on, the better the chatbot is likely to be.

With ChatGPT having reached 100 million users in just two months, OpenAI had a big head start in those dialogues. To play catch-up, Google employed contract evaluators. Some of these contractors, who worked for outsourcing firm Appen, later filed a complaint with the National Labor Relations Board, saying they had been fired for speaking out about low pay and unreasonable deadlines. One told the Washington Post that raters were given as little as five minutes to evaluate detailed answers from Bard on such complex topics as the origins of the Civil War. The contractors said they feared the time pressure would lead to flawed ratings and make Bard unsafe. Google has said the matter is between Appen and its employees and that the ratings are just one data point among many used to train and test Bard; the training continues apace. Other reports have claimed that Google tried to bootstrap Bard’s training by using answers from its rival, ChatGPT, that users had posted to a website called ShareGPT. Google denies using such data for training.

Unlike the new Bing, Bard was not designed to be a search tool, even though it can provide links to relevant internet sites. Bard’s purpose, Krawczyk says, is to serve as “a creative collaborator.” In his telling, Bard is primarily about retrieving ideas from your own mind. “It’s about taking that piece of information, that sort of abstract concept that you have in your head, and expanding it,” he says. “It’s about augmenting your imagination.” Google Search, Krawczyk says, is like a telescope; Bard is like a mirror.

Exactly what people are seeing in Bard’s mirror is hard to say. The chatbot’s debut was rocky: In the blog post announcing Bard, an accompanying screenshot of its output included an erroneous statement that the James Webb Space Telescope, launched in 2021, took the first pictures of a planet outside our solar system. (In fact, an Earth-based telescope achieved that feat in 2004.) It turned out to be a $100 billion mistake: That’s how much market value Alphabet lost in the 48 hours after journalists reported the error. Meanwhile, Google has warned its own staff not to put too much faith in Bard: In June it issued a memo reminding employees not to rely on coding suggestions from Bard or other chatbots without careful review.

Since Bard’s debut, Google has upgraded the A.I. powering the chatbot to its PaLM 2 LLM. According to testing Google has published, PaLM 2 outperforms OpenAI’s top model, GPT-4, on some reasoning, mathematical, and translation benchmarks. (Some independent evaluators have not been able to replicate those results.) Google also made changes that greatly improved Bard’s responses to math and coding queries. Krawczyk says that some of these changes have reduced Bard’s tendency to hallucinate, but that hallucination is far from solved. “There’s no best practice that is going to yield ‘x,’ ” he says. “It’s why Bard launched as an experiment.”

Google declined to reveal how many users Bard has. But third-party data offers signs of progress: Bard website visits increased from about 50 million in April to 142.6 million in June, according to Similarweb. (In July, Google rolled Bard out to the European Union and Brazil and expanded its responses to cover 35 additional languages, including Chinese, Hindi, and Spanish.) The June figure still trails far behind ChatGPT’s 1.8 billion visits the same month. Both numbers in turn pale beside those for Google’s main search engine, with 88 billion monthly visits and 8.5 billion daily search queries. Since the launch of Bing Chat, Google’s search market share has increased slightly, to 93.1%, while Bing’s is essentially unchanged at 2.8%, per data from StatCounter.

Bing is far from the biggest threat A.I. poses to search. In a survey of 650 people in the U.S. in May, conducted by Bloomberg Intelligence, 60% of those between ages 16 and 34 said they preferred asking ChatGPT questions to using Google Search. “The younger age group may help drive a permanent shift in how search is used online,” says Mandeep Singh, senior technology analyst at Bloomberg Intelligence.

That’s where SGE comes in. Google’s new generative A.I. tool allows users to find answers to more complex, multistep queries than they might have been able to with a traditional Google Search, according to Elizabeth Reid, Google’s vice president of Search.

There are plenty of kinks to work out—especially around speed. While Google Search returns results instantly, users have to wait frustratingly long seconds for SGE’s snapshot. “Part of the technology fun is working on the latency,” Reid told me, sardonically, during a demo before I/O. In a later interview, she said Google had made progress on speed, and noted that users might tolerate a brief delay before getting a clear answer from SGE, rather than spending 10 minutes clicking through multiple links to puzzle out an answer on their own.

Users have also caught SGE engaging in plagiarism—lifting answers verbatim from websites, and then not providing a link to the original source. That reflects a problem endemic to generative A.I. “What’s inherently tricky about the technology is it doesn’t actually always know where it knows things from,” Reid says. Google says it’s continuing to learn about SGE’s strengths and weaknesses, and to make improvements.

The biggest issue is that Google doesn’t know if it can make as much money from ads around generative A.I. content as it has from traditional Search. “We are continuing to experiment with ads,” Reid says. This includes placing ads in different positions around the SGE page, as well as what Reid calls opportunities for “native” ads built into the snapshot answer—although Google will have to figure out how to make clear to users that a given portion of a response is paid for. Reid also said Google was thinking about how to add additional “exits” throughout the SGE page, providing more opportunities for people to link out to third-party websites.

The solution to that “exit” problem is of vital interest to publishers and advertisers who depend on Google’s search results to drive traffic to their sites—and who are already freaking out. With snapshot answers, people may be far less likely to click through on links. News publishers are particularly incensed: With its current LLM approach, Google essentially scrapes information from their sites, without compensation, and uses that data to build A.I. that may destroy their business. Many large news organizations have begun negotiations, seeking millions of dollars per year to grant Google access to their content. In July, the Associated Press became the first news organization to sign a deal of this kind with OpenAI, although financial terms were not disclosed. (Jordi Ribas, Microsoft’s head of search, told the audience at the Fortune Brainstorm Tech conference in July that the company’s own data shows that users of Bing Chat are more likely to click on links than users of a traditional Bing search.)

Of course, if people don’t click through on links, that also poses an existential threat to Alphabet itself. It remains far from clear that the business model that drives 80% of Google’s revenues—advertising—is the best fit for chatbots and assistants. OpenAI, for example, has chosen a subscription model for its ChatGPT Plus service, charging users $20 per month. Alphabet has many subscription businesses, from YouTube Premium to various features in its Fitbit wearables. But none are anywhere near as lucrative as advertising.

Nor has the company grown any of them as quickly. Google’s non-advertising revenue, excluding its Cloud service and “other bets” companies, grew just 3.5% in 2022, to $29 billion, while ad revenue leaped ahead at twice that rate, to $224 billion. It’s also not clear that Google could convert a meaningful mass of people accustomed to free internet searches to become paying subscribers. Another ominous finding of Bloomberg Intelligence’s A.I. survey is that most people of all ages, 93%, said they would not want to pay more than $10 per month for access to an A.I. chatbot.

If generative A.I. becomes a Search killer, where can Google look for growth? Its cloud business, for one, is likely to benefit. Google has long built its A.I. prowess into its cloud services, and analysts say the boom is perking up customer interest. Google was the only major cloud provider to gain market share in the past year, edging up to 11%. Google Cloud also turned a profit for the first time in the first quarter of 2023.

Still, Kramer of Arete Research notes that Google has a long way to go to catch its competitors. Amazon’s and Microsoft’s cloud offerings are both far bigger than Google’s and far more profitable. Plus, the A.I.-related competition is stiff: The ChatGPT buzz has led many business customers to seek out OpenAI’s LLM tech through Microsoft’s Azure Cloud.

More broadly, the generative A.I. moves Google has made so far have been mostly defensive, parries to the thrusts from OpenAI and Microsoft. To win the race for what comes next, Google will have to play offense. And many experts agree that what comes next is A.I. systems that don’t just generate content but take actions across the internet and operate software on behalf of a user. They will be “digital agents,” able to order groceries, book hotel rooms, and otherwise manage your life beyond the search page—Alexa or Siri on steroids.

“Whoever wins the personal agent, that’s the big thing, because you will never go to a search site again, you will never go to a productivity site, you’ll never go to Amazon again,” Bill Gates said in May. Gates said he’d be disappointed if Microsoft did not try to build an agent. He is also an investor in Inflection, a startup launched by DeepMind cofounder Mustafa Suleyman that says it aims to build everyone’s personal A.I. “chief of staff.”

Google has teased a forthcoming family of more powerful A.I. models called Gemini. Pichai has said Gemini will be “highly efficient at tool and API integrations,” a strong suggestion that it could power a digital agent. In another signal, Google’s DeepMind published research late in 2022 about an A.I. called Gato that experts see as a likely precursor to Gemini.

Krawczyk, from the Bard team, acknowledges the excitement around digital agents, but he notes that the assistant-to-agent transformation will have to be managed cautiously to stay within Google’s mandate to be “responsible.” After all, an agent that acts in the real world can cause more harm than a mere text generator. Compounding the problem, people tend to be poor at giving instructions. “We often don’t provide enough context,” Krawczyk says. “We want these things to be able to read our minds. But they can’t.”

Precisely because of such concerns, regulation will shape Google’s future. In late July, the White House announced that seven top A.I. companies, including Google, were voluntarily committing to several steps around public transparency, safety testing, and security of their A.I. models. But Congress and the Biden administration may well impose additional guardrails. In the E.U., an A.I. Act nearing completion may pose challenges for Alphabet, by requiring transparency around A.I. training data and compliance with strict data privacy laws. Walker, Google’s global affairs chief, has the unenviable task of navigating these currents. “The race should be for the best A.I. regulation, not the first A.I. regulation,” he says, hinting at the long slog ahead.

Walker is a fan of Shakespeare, and in preparing to interview him, I asked Bard whether there were analogies from the work of that other bard that might encapsulate Alphabet’s current innovator’s dilemma. Bard suggested Prospero, from The Tempest. Like Alphabet, Prospero had been the dominant force on his island, using magic to rule, much as Alphabet had used its supremacy in search and earlier forms of A.I. to dominate its realm. Then Prospero’s magic summoned a storm that washed rivals onto his island—and his world was upended. A pretty apt analogy, actually.

But when I ask Walker about Shakespearean parallels for the current moment, he instead quotes a line from Macbeth in which Banquo says to the three witches, “If you can look into the seeds of time,/And say which grain will grow and which will not,/Speak then to me, who neither beg nor fear/Your favors nor your hate.”

“That’s what A.I. does,” Walker says. “By looking at a million seeds, it can understand which ones are likely to grow and which ones are likely to not. So it’s a tool for helping us anticipate what might happen.”

But A.I. won’t be able to tell Walker or Pichai if Google has found a solution to the end of Search as we know it. For now, neither the bard nor Bard can answer that question.

This article appears in the August/September 2023 issue of Fortune with the headline, “Sundar Pichai and Google face their $160 billion dilemma.”